Looking to master the art of web scraping? Beautiful Soup is the answer! This Python library effortlessly extracts and parses data from HTML and XML files.

In this article, we’ll guide you through the basics of web scraping, introduce you to Beautiful Soup, show you how to import the required libraries, extract and parse data, tackle advanced techniques, store scraped data, and share best practices.

Stay tuned for a comprehensive overview and hands-on tips to enhance your web scraping skills!

Key Takeaways:

- Beautiful Soup simplifies web scraping by providing Pythonic idioms for data retrieval and modification.

- It is essential to understand web scraping and import necessary libraries before using Beautiful Soup.

- For clean and maintainable code, consider using Beautiful Soup’s advanced techniques for handling different page structures and formatting data.

Introduction to Beautiful Soup

Beautiful Soup is a Python library for web scraping and for parsing HTML and XML data. It takes its name from a song in Lewis Carroll’s ‘Alice’s Adventures in Wonderland’, and it helps developers extract data from web pages with very little code.

One of the key features of Beautiful Soup is its ability to navigate the parse tree, allowing users to search for specific elements, extract information, and modify the document structure with ease. It provides support for different parsers, enabling flexibility in handling various types of markup languages.

Another notable aspect is its robustness in handling poorly formatted HTML, making it a go-to choice for scraping data from websites with inconsistent or messy code.

Furthermore, Beautiful Soup simplifies the extraction process by converting complex HTML documents into a navigable parse tree, streamlining the scraping workflow for developers.
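To make the point about messy markup concrete, here is a minimal sketch. The HTML string is invented for illustration, and it assumes Python’s built-in ‘html.parser’; other parsers may repair the same markup into a slightly different tree.

```python
from bs4 import BeautifulSoup

# Deliberately messy markup (made up for this sketch):
# neither <p> is closed, and <b> is left open.
messy_html = "<p>First paragraph<p>Second <b>bold"

# "html.parser" ships with Python, so nothing extra needs installing.
soup = BeautifulSoup(messy_html, "html.parser")

# Even though the markup is invalid, the tree is still searchable.
paragraphs = soup.find_all("p")
bold_text = soup.b.get_text()

print(len(paragraphs))
print(bold_text)
print(soup.prettify())  # shows how the parser closed the open tags
```

Printing the prettified tree is a quick way to check how a given parser interpreted a broken page before writing any extraction logic.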

Understanding Web Scraping

Web scraping is the process of extracting data from websites using automated tools such as Beautiful Soup in Python. It involves parsing the HTML and XML structure of web pages to gather specific information.

Web scraping has become essential for businesses, researchers, and developers seeking to extract valuable insights from the vast ocean of online data. By utilizing Beautiful Soup and Python, users can automate the retrieval and organization of data, saving time and effort.

The ethical implications of web scraping cannot be ignored. It is crucial to respect the terms of service and website policies when scraping data, ensuring that the process is conducted ethically and legally.

HTML and XML play a vital role in structuring web content, providing a framework that web scrapers can navigate to extract the necessary data efficiently.

Introduction to Beautiful Soup

Beautiful Soup, a Python library known for its simplicity and effectiveness, offers a comprehensive solution for parsing HTML and XML documents. Drawing inspiration from Lewis Carroll’s ‘Alice’s Adventures in Wonderland’, Beautiful Soup simplifies the process of navigating through the HTML tree structure.

By traversing the HTML or XML file, Beautiful Soup allows you to extract specific data with ease. It provides a high-level interface for automatically converting incoming documents into Unicode and outgoing documents into UTF-8. The library creates a parse tree that can be used to navigate and search for tags, attributes, and data. This dynamic tool is a game-changer for developers and data scientists looking to scrape information from websites efficiently.

Getting Started with Beautiful Soup

To begin working with Beautiful Soup, Python developers need to install the library and understand the basics of parsing HTML and XML data. Getting started with Beautiful Soup involves importing the necessary modules and initiating the parsing process.

After successfully installing Beautiful Soup, the next step is to import the required libraries. Python developers can do this by including the following code:

- from bs4 import BeautifulSoup

- import requests

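The two imports play different roles. requests, a separate third-party package that can be installed with ‘pip install requests’, fetches the HTML content, while BeautifulSoup parses it. Once both are imported, developers can create a BeautifulSoup object by passing in the HTML content to be parsed, along with the name of the parser to use.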
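As a rough sketch of that last step, the snippet below builds a BeautifulSoup object from a literal string so it runs without a network connection. The markup and variable names are placeholders, not part of any real site.

```python
from bs4 import BeautifulSoup

# In a real script the markup would usually come from an HTTP response, e.g.
#   html = requests.get(url, timeout=10).text
# A literal string keeps this sketch runnable offline.
html = """
<html>
  <head><title>Example page</title></head>
  <body><h1>Hello, soup</h1></body>
</html>
"""

# The second argument names the parser; "html.parser" is built into Python.
soup = BeautifulSoup(html, "html.parser")

page_title = soup.title.string   # text inside <title>
heading = soup.h1.get_text()     # text inside the first <h1>

print(page_title)
print(heading)
```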

Importing Required Libraries

Before diving into web scraping tasks, developers must import essential libraries like Beautiful Soup in Python. The installation of Beautiful Soup ensures access to a wide range of functions for parsing web content.

Beautiful Soup is a powerful Python library that simplifies the process of extracting information from web pages. Its user-friendly syntax allows developers to navigate through HTML and XML documents effortlessly, making it a popular choice for web scraping projects. To install Beautiful Soup, users can use pip, the default package installer for Python. By running the command ‘pip install beautifulsoup4’ in the terminal, developers can quickly set up this library and start utilizing its numerous features.

One of the key functionalities offered by Beautiful Soup is the ability to parse and extract specific elements from HTML content, such as retrieving text, finding links, or locating specific tags. This parsing capability streamlines the process of extracting relevant data from websites, saving developers valuable time and effort. Additionally, Beautiful Soup supports different parsers, including ‘html.parser’, which ships with Python, and ‘lxml’, which must be installed separately, allowing users to choose the most suitable option based on their project requirements.

Extracting and Parsing Data

The core functionality of Beautiful Soup lies in its ability to extract and parse data from HTML and XML documents. By utilizing Beautiful Soup’s parsing methods, developers can navigate the document structure and retrieve specific elements.

One of the key methods in Beautiful Soup is the find() method, which allows developers to locate the first occurrence of a specific tag or class within a document.

The find_all() method is useful for retrieving all occurrences of a particular tag or class, enabling developers to collect multiple elements at once.

Beautiful Soup also offers the select() method, which uses CSS selectors to target elements, providing a flexible way to extract desired data from web pages.
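The three methods can be compared side by side. This is a small sketch on made-up markup; the id and class names are assumptions chosen only for the example.

```python
from bs4 import BeautifulSoup

html = """
<div id="posts">
  <p class="intro">Welcome</p>
  <p class="post">First post</p>
  <p class="post">Second post</p>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# find() returns the first matching element (or None if nothing matches).
first_p = soup.find("p")

# find_all() returns every match; class_ avoids clashing with the keyword "class".
posts = soup.find_all("p", class_="post")

# select() takes a CSS selector and also returns a list of matches.
selected = soup.select("#posts p.post")

first_text = first_p.get_text()
post_texts = [p.get_text() for p in posts]
selected_texts = [p.get_text() for p in selected]

print(first_text)
print(post_texts)
print(selected_texts)
```

Because find() can return None, it is worth checking the result before calling methods on it when a page’s structure is not guaranteed.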

Searching and Retrieving Elements from a Page

In web scraping tasks, the process of searching and retrieving specific elements from a page is streamlined with Beautiful Soup. Developers can utilize various search methods provided by Beautiful Soup to locate and extract relevant data.

One common method is using tag names to find elements on a webpage. For instance, searching for all ‘p’ tags to extract paragraphs or ‘a’ tags for links. Beautiful Soup also allows developers to search by attributes, such as class or id, making it easier to pinpoint specific elements. Developers can navigate through the HTML tree using methods like find_parent() or find_next_sibling(), enabling them to access related elements efficiently.
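Here is one way the attribute searches and tree navigation described above might look in practice. The menu markup is hypothetical and exists only to give the calls something to work on.

```python
from bs4 import BeautifulSoup

html = """
<ul id="menu">
  <li><a href="/home" class="nav">Home</a></li>
  <li><a href="/blog" class="nav">Blog</a></li>
</ul>
<p id="footer">Footer text</p>
"""
soup = BeautifulSoup(html, "html.parser")

# Search by tag name plus class, then read an attribute like a dict key.
links = [a["href"] for a in soup.find_all("a", class_="nav")]

# Search by id.
footer = soup.find(id="footer").get_text()

# Navigate from an element to its relatives.
first_link = soup.find("a")
parent_list = first_link.find_parent("ul")
next_item = first_link.find_parent("li").find_next_sibling("li")

print(links)
print(footer)
print(parent_list["id"])
print(next_item.a["href"])
```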

Advanced Beautiful Soup Techniques

Beyond basic parsing, Beautiful Soup offers advanced techniques to handle complex HTML tree structures effectively. Understanding these advanced methods enables developers to extract data from intricate web page layouts.

One of the key features is the ability to navigate the HTML tree and pinpoint specific elements using various searching methods. Whether it’s finding elements by class, id, or other attributes, Beautiful Soup provides developers with the flexibility to target and extract data precisely.

Developers can utilize advanced parsing techniques to handle nested structures and irregular HTML formatting, ensuring accurate data extraction from even the most challenging web pages.

Dealing with Different Page Structures

Adapting Beautiful Soup to handle diverse page structures is essential for successful data extraction.

Developers can use Beautiful Soup to navigate complex HTML layouts and extract data with precision. For instance, when dealing with tables, find_all('table') isolates tabular data so that specific rows or cells can be extracted. When encountering nested div tags, developers can rely on the fact that find() and find_all() search all descendants of an element by default, so deeply nested elements can be reached without walking each level by hand.
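A short sketch of both cases follows. The table contents and the nested div are invented for the example; real pages will need their own selectors.

```python
from bs4 import BeautifulSoup

html = """
<table>
  <tr><th>Name</th><th>Price</th></tr>
  <tr><td>Tea</td><td>3.50</td></tr>
  <tr><td>Coffee</td><td>4.00</td></tr>
</table>
"""
soup = BeautifulSoup(html, "html.parser")

# Collect every row as a list of cell strings (header cells included).
rows = []
for table in soup.find_all("table"):
    for tr in table.find_all("tr"):
        cells = [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
        rows.append(cells)

# Nested elements: searches look through all descendants by default.
nested = BeautifulSoup("<div><div><span>deep</span></div></div>", "html.parser")
deep = nested.div.find("span").get_text()
# recursive=False limits the search to direct children, so nothing is found here.
direct = nested.div.find("span", recursive=False)

print(rows)
print(deep)
print(direct)
```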

Storing Scraped Data

After extracting data using Beautiful Soup, developers need to store the scraped information efficiently. Proper formatting and cleaning of the extracted data ensure that it is organized for further analysis and usage.

One essential step in storing scraped data is establishing a standardized format. This involves converting the extracted information into a structured form, such as a database or CSV file. By doing so, developers can easily access and manipulate the data. Additionally, cleaning the data is crucial to remove any inconsistencies, duplicates, or irrelevant content. This process ensures that the stored information is accurate and reliable, facilitating smoother analysis.
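As an illustration of writing scraped records to CSV, the sketch below uses Python’s standard csv module. The records and field names are placeholders, and an in-memory buffer stands in for a real file.

```python
import csv
import io

# Records as they might look after extraction (made-up values).
records = [
    {"title": "First post", "url": "/posts/1"},
    {"title": "Second post", "url": "/posts/2"},
]

# An in-memory buffer keeps the sketch self-contained; for a real file use
#   open("posts.csv", "w", newline="", encoding="utf-8")
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["title", "url"])
writer.writeheader()
writer.writerows(records)

csv_text = buffer.getvalue()
print(csv_text)
```

Fixing the field names up front means every row ends up with the same columns, which makes later analysis simpler.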

Formatting and Cleaning Data

Post-extraction formatting and cleaning procedures are crucial to prepare the data for analysis. Beautiful Soup users can apply formatting techniques to ensure consistency and cleanliness in the extracted information.

Once the data has been extracted using Beautiful Soup, the next step involves standardizing the structure and refining the content. This can be achieved through techniques such as removing HTML tags, eliminating duplicate entries, and normalizing text formats.

Developers can combine Beautiful Soup with ordinary Python string methods to turn irregular data into a more organized format. For instance, stripping extra whitespace, converting text to lowercase, and dealing with special characters are common procedures when cleaning scraped data.
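The cleaning steps mentioned here could be sketched as follows. The list items are invented, and the clean() helper is a hypothetical name, not part of Beautiful Soup.

```python
from bs4 import BeautifulSoup

html = """
<ul>
  <li>  Green   Tea </li>
  <li>green tea</li>
  <li><b>Black</b>&nbsp;Coffee</li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")


def clean(text):
    """Collapse whitespace runs (including non-breaking spaces) and lowercase."""
    return " ".join(text.split()).lower()


# get_text() drops the tags; clean() normalizes what is left.
cleaned = [clean(li.get_text()) for li in soup.find_all("li")]

# dict.fromkeys() removes duplicates while keeping first-seen order.
unique = list(dict.fromkeys(cleaned))

print(cleaned)
print(unique)
```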

Best Practices and Troubleshooting

Adhering to best practices while using Beautiful Soup is crucial for efficient web scraping operations. Developers should also familiarize themselves with common troubleshooting techniques to overcome potential challenges during data extraction.

One key best practice in utilizing Beautiful Soup is to structure your code in a clear and organized manner. This helps in maintaining readability and makes it easier to debug if issues arise. It is recommended to use Beautiful Soup with compatible parsers like lxml for improved performance. When encountering issues, developers can refer to the official documentation to find solutions for common problems such as incorrectly formatted HTML or issues with element selection.

Writing Clean and Maintainable Code

Maintaining clean and well-structured code is essential for long-term development projects involving Beautiful Soup. By following best practices, developers can ensure their code is readable, maintainable, and efficient.

When working with Beautiful Soup, it’s imperative to maintain a clear structure within the codebase. This not only enhances the readability for yourself and fellow developers but also fosters easier maintenance and troubleshooting down the line. By utilizing consistent naming conventions, proper indentation, and meaningful comments throughout the code, you can effortlessly navigate and modify the scraping scripts whenever needed.

Adhering to coding best practices such as breaking down complex tasks into smaller, manageable functions, and modularizing the code can significantly improve code maintainability. Encapsulating specific functionalities in separate functions or classes not only simplifies the code logic but also promotes code reusability and scalability in future enhancements.

For instance, instead of having a monolithic scraping script, consider segmenting the code into separate functions handling different parsing tasks like extracting specific elements or navigating through the website structure. This approach not only makes the code more organized but also allows for easier debugging and unit testing of individual components.
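One possible way to split a scraper into small functions is sketched below. The function names and the sample markup are assumptions made for illustration; the point is only that each piece can be tested on its own.

```python
from bs4 import BeautifulSoup


def parse_page(html):
    """Turn raw markup into a searchable tree."""
    return BeautifulSoup(html, "html.parser")


def extract_title(soup):
    """Return the first <h1> text, or None if the page has no <h1>."""
    heading = soup.find("h1")
    return heading.get_text(strip=True) if heading else None


def extract_links(soup):
    """Return the href of every <a> tag that actually has one."""
    return [a["href"] for a in soup.find_all("a", href=True)]


def scrape(html):
    """Combine the individual steps into one result dictionary."""
    soup = parse_page(html)
    return {"title": extract_title(soup), "links": extract_links(soup)}


sample = '<h1> Demo </h1><a href="/a">A</a><a>no href</a><a href="/b">B</a>'
result = scrape(sample)
empty_result = scrape("")

print(result)
print(empty_result)
```

Keeping the parsing functions free of network calls means they can be unit tested against saved HTML snippets.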

Reviewing What We’ve Learned

After exploring the functionalities of Beautiful Soup, it is essential to review and reinforce the key concepts learned. Reflecting on the features and capabilities of Beautiful Soup enhances understanding and retention.

One of the fundamental aspects of Beautiful Soup is its ability to parse HTML and XML documents, providing a convenient way to extract data from web pages. Utilizing the tree-based parsing system, users can navigate the document structure effectively to locate specific elements or content. Beautiful Soup offers powerful methods for filtering and manipulating parsed data, such as find(), find_all(), and select(), enabling users to streamline their scraping process efficiently.

Understanding the difference between parsers, such as Python’s built-in html.parser, lxml’s HTML parser, and lxml’s XML parser, allows users to choose the most suitable option based on their specific requirements. The flexibility and robustness of Beautiful Soup make it an invaluable tool for scraping data from websites with complex structures.

Conclusion and Next Steps

Mastering Beautiful Soup enables developers to extract valuable data for sales and marketing strategies.

After gaining proficiency in using Beautiful Soup for web scraping, individuals can explore advanced techniques to enhance their data extraction capabilities. Incorporating CSS selectors, navigating complex HTML structures, and handling dynamic content are some of the advanced skills that can be honed. Because Beautiful Soup only parses the markup it is given, pages that build their content with JavaScript need to be rendered by another tool first.

Understanding the ethical implications of web scraping is essential for maintaining credibility and staying compliant with data protection laws. Respecting website terms of service, avoiding overwhelming servers with excessive requests, and obtaining consent when accessing personal data are key ethical considerations to be mindful of.
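One practical starting point, offered here as an assumption rather than something the article prescribes, is to check a site’s robots.txt before scraping. The sketch uses the standard library’s urllib.robotparser on an invented robots.txt and a made-up user agent name.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt; a real one would be downloaded from the target site.
robots_txt = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# "my-scraper" is a hypothetical user agent name.
allowed = parser.can_fetch("my-scraper", "https://example.com/articles/1")
blocked = parser.can_fetch("my-scraper", "https://example.com/private/data")
delay = parser.crawl_delay("my-scraper")

print(allowed)
print(blocked)
print(delay)  # seconds to wait between requests, e.g. via time.sleep(delay)
```

robots.txt is only one signal; a site’s terms of service and applicable data protection rules still apply.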

Python First Match

Finding the first match in Python means locating the initial occurrence of a specific pattern or value within a dataset. It is a common step in data processing and analysis.

When working with large datasets, built-in tools such as re.search() for regular expressions or index() for lists return the earliest occurrence. Note that re.search() returns None when nothing matches, while index() raises a ValueError. This capability proves useful in scenarios like text parsing, where identifying the first occurrence drives subsequent operations.

First-match lookups are also widely used in data cleaning tasks, where handling duplicates or anomalies requires isolating the initial appearance of specific data points. For instance, when processing a CSV file, identifying the first occurrence of a unique identifier supports data deduplication and error correction.
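A few common ways to get a first match are sketched below on made-up data. The next() idiom with a default is an addition not mentioned in the text above.

```python
import re

text = "order 17 shipped, order 42 pending"

# re.search() returns the first match object, or None if there is no match.
match = re.search(r"\d+", text)
first_number = match.group() if match else None

ids = ["a1", "b2", "a1", "c3"]

# list.index() returns the position of the first occurrence
# and raises ValueError if the value is absent.
first_index = ids.index("a1")

# next() over a generator stops at the first item satisfying a condition;
# the second argument is the fallback when nothing matches.
first_b = next((item for item in ids if item.startswith("b")), None)
missing = next((item for item in ids if item.startswith("z")), None)

# Keeping only the first appearance of each identifier (order preserved).
deduplicated = list(dict.fromkeys(ids))

print(first_number, first_index, first_b, missing, deduplicated)
```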

Python Mutable vs Immutable Types

Mutable and immutable types differ fundamentally in how data is handled and modified in Python. Understanding the differences between them is crucial for efficient programming and memory management.

Mutable types in Python, such as lists and dictionaries, allow for changes to the object after creation. When a mutable object is modified, Python updates the original object instead of creating a new one. This can make it easier to manipulate data directly in memory, but it also requires caution to avoid unintended changes.

On the other hand, immutable types like strings and tuples cannot be altered once they are created. Attempting an in-place change, such as assigning to an element of a tuple, raises a TypeError, and operations that appear to modify an immutable object, such as string concatenation, return a new object instead. While this may seem less flexible, it protects data integrity and prevents unexpected side effects.
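The difference is easy to see in a few lines. The variable names below are arbitrary.

```python
# Mutable: two names can refer to the same list, and a change through
# one name is visible through the other.
numbers = [1, 2, 3]
alias = numbers
alias.append(4)
same_list = numbers is alias

# Immutable: string methods return a new string and leave the original alone.
greeting = "hello"
shouted = greeting.upper()

# Immutable: in-place assignment on a tuple is rejected outright.
point = (1, 2)
try:
    point[0] = 99
    tuple_error = None
except TypeError as exc:
    tuple_error = type(exc).__name__

print(numbers, same_list)
print(greeting, shouted)
print(point, tuple_error)
```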

Pandas Read Write Files

Pandas provides functions for reading and writing a variety of file formats, with the data held in a DataFrame in between. Knowing these functions is essential for efficient data processing and analysis.

One of the key strengths of Pandas is its ability to read and write data in different formats, such as CSV, Excel, SQL databases, and more. This versatility makes it a powerful tool for managing diverse datasets. When using Pandas to read a file, you can load the data into a DataFrame, allowing for easy manipulation and analysis.

For instance, when dealing with a CSV file, you can use Pandas to read the data into a DataFrame using the read_csv() function. Similarly, to write data back to a file, you can leverage functions like to_csv() for CSV files or to_excel() for Excel files.
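A minimal round trip with read_csv() and to_csv() might look like this. In-memory buffers stand in for files, and the column names and values are invented for the example.

```python
import io

import pandas as pd

# read_csv() accepts a path or any file-like object; a StringIO buffer
# stands in for a file on disk here.
csv_data = io.StringIO("title,views\nFirst post,120\nSecond post,85\n")
df = pd.read_csv(csv_data)

# Work with the data as a DataFrame, e.g. add a derived column.
df["popular"] = df["views"] > 100

# to_csv() writes it back out; index=False leaves out the row index.
output = io.StringIO()
df.to_csv(output, index=False)
csv_out = output.getvalue()

print(df)
print(csv_out)
```

Writing Excel files with to_excel() works along the same lines but needs an additional Excel engine package installed.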

Flask by Example Implementing a Redis Task Queue

Implementing a Redis Task Queue in Flask showcases a powerful approach to background task processing and management. Exploring Flask by example with a Redis Task Queue implementation enhances efficiency in handling asynchronous tasks.

Integrating Redis with Flask involves setting up Redis as the message broker for handling tasks. First, ensure you have Redis installed and running on your system. Create a new Flask app or use an existing one where you want to implement the task queue. Next, install the Python packages that let Flask talk to Redis, for example the redis client library together with a queue library such as RQ. Finally, define the tasks you wish to execute in the background within your Flask application.

One key benefit of using a Redis Task Queue is the ability to perform time-consuming tasks asynchronously without impacting the main application’s performance. This is particularly useful for tasks like sending emails, processing large data sets, or handling periodic maintenance tasks.

Pygame: A Primer

Pygame: A Primer serves as an introductory guide to Pygame, a popular Python library for game development. Exploring Pygame fundamentals and functionalities is essential for aspiring game developers.

Pygame is known for its simplicity and versatility, making it a preferred choice among beginners and experienced developers alike. With Pygame, developers can easily create graphics, handle user input, and implement game logic effectively.

The library provides a range of built-in functions and modules that streamline the game development process, allowing developers to focus on creativity and gameplay rather than boilerplate code. Pygame’s robust documentation and active community further enhance its appeal by offering ample resources and support to users at all skill levels.

Invalid Syntax Python

Invalid syntax errors (SyntaxError) occur when the Python interpreter encounters code that does not conform to the language’s rules. Understanding how to troubleshoot them is crucial for identifying and rectifying coding mistakes.

One common reason for invalid syntax errors is a missing parenthesis, bracket, or quotation mark. For example, forgetting to close a bracket, or opening a string with a single quote and closing it with a double quote, results in this type of error. Python’s error messages report the line where the interpreter noticed the problem, which can be the line after the actual mistake, so it is worth checking the preceding line as well.

To handle Invalid Syntax errors effectively, it’s important to double-check the syntax of the problematic line and ensure that all necessary punctuation marks are correctly placed. Using an integrated development environment (IDE) that highlights syntax errors can aid in early detection and prevention of such issues.

Here is an example of troubleshooting an Invalid Syntax error in a Python script:

- Code snippet:

| print("Hello Python" | # Missing closing parenthesis |

- Error message: SyntaxError: unexpected EOF while parsing (Python 3.9 and earlier; Python 3.10 and later report SyntaxError: '(' was never closed)

- Resolution: Add a closing parenthesis to the print statement:

| print("Hello Python") | # Corrected syntax |
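To try this kind of troubleshooting without crashing a script, the built-in compile() function can check a piece of source text for syntax errors. This is a sketch; the helper name syntax_error_for is made up, and the exact message text depends on the Python version.

```python
# Two versions of the same statement: one missing its closing parenthesis.
broken = 'print("Hello Python"'
fixed = 'print("Hello Python")'


def syntax_error_for(source):
    """Return the SyntaxError message for source, or None if it compiles."""
    try:
        compile(source, "<example>", "exec")
    except SyntaxError as exc:
        return exc.msg
    return None


broken_msg = syntax_error_for(broken)
fixed_msg = syntax_error_for(fixed)

print("broken:", broken_msg)
print("fixed:", fixed_msg)
```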

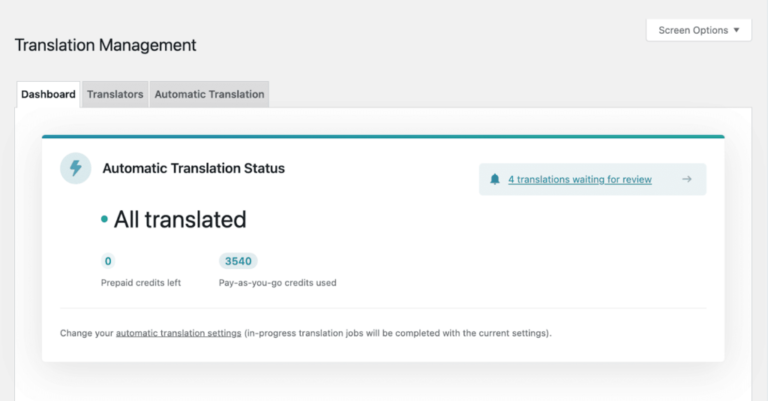

Translating This Documentation

Translating This Documentation into different languages can enhance accessibility and reach for a wider audience.

Exploring translation options and tools for this documentation ensures effective dissemination of knowledge. By translating technical content, you can cater to a diverse global audience, breaking down language barriers and expanding the impact of your work.

When preparing multilingual documentation, Python and Beautiful Soup can help with part of the workflow. Beautiful Soup does not translate anything itself, but it can parse HTML documentation and extract the text that needs translating, which makes it easier to automate the handoff to translators or translation tools.

Frequently Asked Questions

What is Beautiful Soup?

Beautiful Soup is a Python library that makes it easy to extract data from HTML and XML files, using Pythonic idioms for navigating, searching, and modifying the parse tree. It is a popular tool for web scraping and data extraction.

How does Beautiful Soup work?

Beautiful Soup uses an HTML or XML parser to create a tree-like representation of the document. It then provides a simple interface for navigating and searching this tree, allowing you to easily extract the desired data.

What makes Beautiful Soup different from other web scraping tools?

Beautiful Soup is a parsing library rather than a complete scraping framework. It is designed specifically for working with HTML and XML documents, and it does not download pages or run JavaScript itself, so it is usually paired with an HTTP client such as requests.

Can Beautiful Soup handle malformed or messy HTML and XML files?

Yes, Beautiful Soup is designed to handle poorly formatted or messy HTML and XML files. The underlying parser builds a usable tree even from invalid markup, for example by closing tags that were left open, although different parsers may repair the same document in different ways.

Is Beautiful Soup suitable for beginners?

Yes, Beautiful Soup is a beginner-friendly library that is easy to learn and use. The Pythonic idioms it provides make it a user-friendly tool for those new to web scraping and data extraction.

Is Beautiful Soup free to use?

Yes, Beautiful Soup is an open-source library released under the MIT license. This means it is free to use for both personal and commercial projects.